🎉 Google and OpenAI and Microsoft Oh My!, AI In Your Office, Translating Sperm Whales

Google, Microsoft and OpenAI's Week Full of Demos, Huge Improvements to Model Capabilities, Do You Use AI at Work?, Scientists Decode Animal Communications with AI

Welcome to this week’s edition of AImpulse, a five-point summary of the most significant advancements in the world of Artificial Intelligence.

Here’s the pulse on this week’s top stories:

What’s Happening: Google kicked off its I/O developer conference this week, announcing a wide array of updates across its AI ecosystem, including enhancements to its flagship Gemini model family and a new video generation model to rival OpenAI’s Sora.

Gemini model updates:

Updates to Gemini 1.5 Pro include an extended 2M-token context window and enhanced performance in code, logic, and image understanding.

Gemini 1.5 Pro can also use the long context to analyze a range of media types, including documents, videos, audio, and codebases.

Google announced Gemini 1.5 Flash, a new model optimized for speed and efficiency with a context window of 1M tokens.

Gemma 2, the next generation of Google’s open models, is launching in the coming weeks, along with a new vision-language model called PaliGemma.

Gemini Advanced subscribers can soon create customized personas called ‘Gems’ from a simple text description, similar to ChatGPT’s GPTs.

Video and image model upgrades:

Google revealed a new video model called Veo, capable of generating 1080p videos longer than 60 seconds from text, image, and video prompts.

The new Imagen 3 text-to-image model was also unveiled with better detail, text generation, and natural language understanding than its predecessor.

Google also introduced VideoFX, a text-to-video tool featuring scene-by-scene storyboard creation and the ability to add music to generations.

VideoFX is launching in a ‘private preview’ in the U.S. for select creators, while ImageFX (with Imagen 3) is available to try via a waitlist.

Why it matters: Gemini’s already industry-leading context window doubles in size, opening new opportunities to apply AI to massive amounts of information. Sora also officially has competition in the impressive Veo demo, but which one will make it to public access first?

What’s Happening: OpenAI unveiled GPT-4o, a new advanced multimodal model that integrates text, vision and audio processing, setting new benchmarks for performance – alongside a slew of new features.

The new model:

GPT-4o provides improved performance across text, vision, audio, coding, and non-English generations, outperforming GPT-4 Turbo.

The new model is 50% cheaper to use, has 5x higher rate limits than GPT-4 Turbo, and boasts 2x the generation speed of previous models.

The new model was also revealed to be the mysterious ‘im-also-a-good-gpt2-chatbot’ found in the LMSYS Chatbot Arena last week.

Voice and other upgrades:

New voice capabilities include real-time responses, detecting and responding with emotion, and combining voice with text and vision.

The demo showcased feats like real-time translation, two AI models analyzing a live video, and using voice and vision for tutoring and coding assistance.

OpenAI’s blog detailed advances like 3D generation, font creation, huge improvements to text generation within images, sound effect synthesis, and more.

OpenAI also announced a new ChatGPT desktop app for macOS with a refreshed UI, integrating directly into computer workflows.

Free for everyone:

GPT-4o, GPTs, and features like memory and data analysis are now available to all users, bringing advanced capabilities to the free tier for the first time.

The GPT-4o model is currently rolling out to all users in ChatGPT and via the API, with the new voice capabilities expected to arrive over the coming weeks.

Why it matters: Real-time voice and multimodal capabilities are shifting AI from a tool to an intelligence we collaborate, learn, and grow with. Additionally, a whole new group of free users (who might’ve been stuck with a lackluster GPT-3.5) are about to get the biggest upgrade of their lives in the form of GPT-4o.

If you missed it, you can rewatch OpenAI’s full demo here.

What’s Happening: Microsoft and LinkedIn just published their Work Trend Index Annual Report, revealing that AI adoption is surging in the workplace — calling 2024 the ‘year AI at work gets real’.

The details:

The report found that use of GenAI has doubled in the last six months, with 75% of knowledge workers using the tech in some capacity.

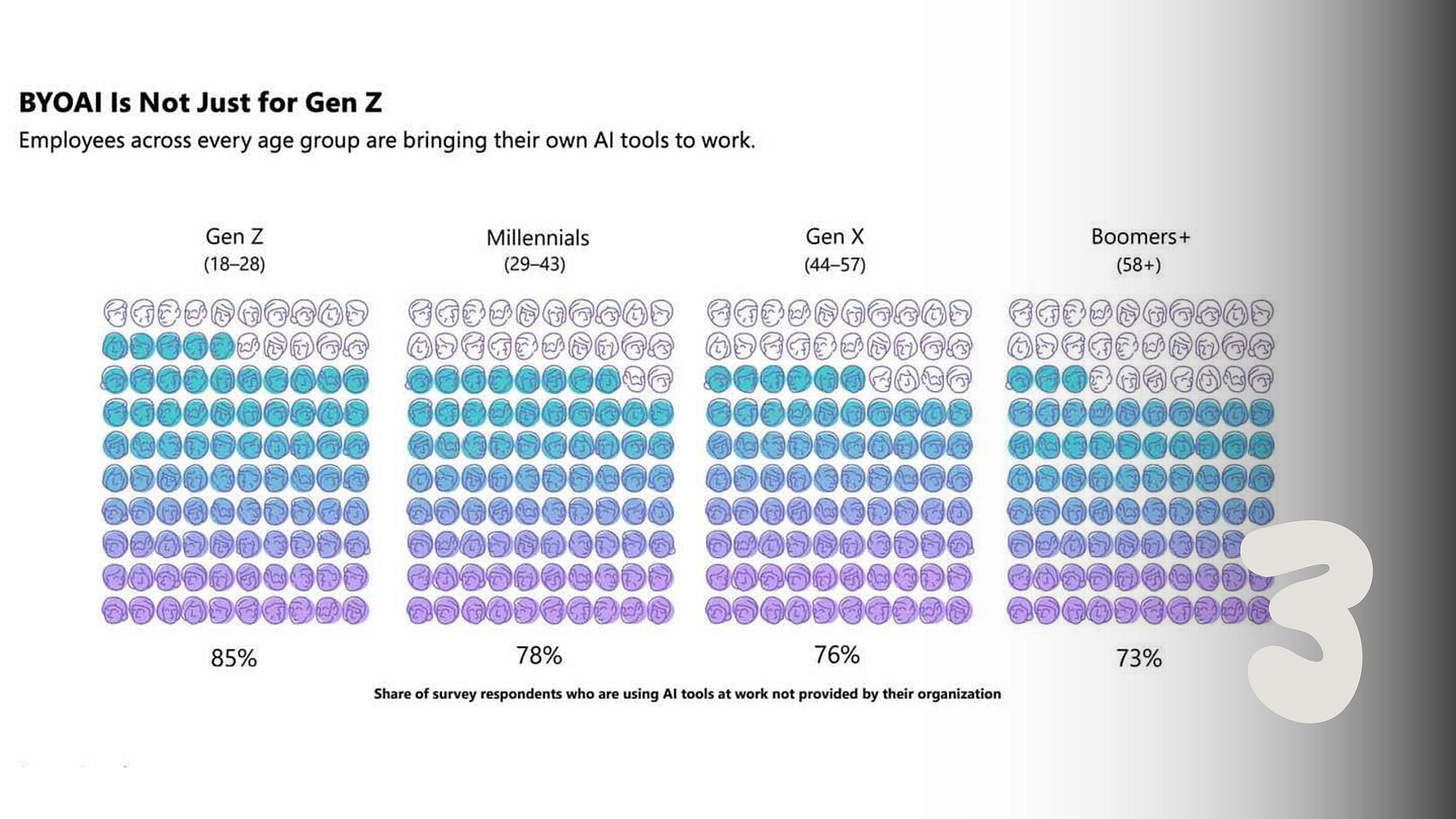

78% of AI users are bringing their own AI to work — with 52% reporting they are reluctant to admit to its use.

66% of leaders wouldn't hire someone without AI skills, and 71% would prefer a less experienced candidate with AI aptitude over a more experienced one without it.

AI power users reported enhanced productivity, creativity, and job satisfaction compared to skeptical peers.

Why it matters: Employees are rapidly embracing AI, even if their organizations aren't fully prepared for the transition. As AI permeates all sectors, generations, and skillsets, early adopters are gaining a significant advantage, while those who aren't at least exploring the technology are missing out on new productivity accelerants.

What’s Happening: Google showcased its new AI agent project ‘Project Astra’, alongside a slew of updates to infuse AI across search and enable Gemini to reason and take more advanced actions for users.

Progress on AI agents:

Google announced Project Astra, a real-time AI agent prototype that can see, hear, and take actions on a user’s behalf.

The demo showcased a voice assistant responding to what it sees and hears, including code, images, and video — capable of advanced reasoning and recall.

Public access for Astra is expected through the Gemini app later this year.

Google also showed off ‘AI teammates’, agents that can answer questions on emails, meetings, and other data within Workspace.

Gemini Live is also rolling out in the coming months, allowing users to speak and converse with Gemini in near real-time.

Search upgrades:

Google Search now features expanded AI Overviews, advanced planning capabilities, and AI-organized search results.

Gemini will be able to handle more complex planning tasks, such as creating, maintaining, and updating trip itineraries.

Search will also receive ‘multi-step reasoning’ capabilities, allowing Gemini to break down questions and speed up research.

Users can also now ask questions with video, allowing Search to analyze visual content and provide helpful AI Overviews.

Why it matters: A new battle for voice assistant supremacy has begun, with OpenAI and Google unveiling remarkable new features within the past week.

Meanwhile, despite rumors of an OpenAI search product and enthusiasm for platforms like Perplexity, dethroning the reigning search giant will be challenging, particularly as Google integrates advanced AI across its entire ecosystem.

What’s Happening: If you’re still with me, this is a fun one. Researchers have used AI to decode sperm whale communication, revealing complex language patterns.

The details:

Sperm whales use Morse-code-like clicks to communicate.

MIT and Project CETI analyzed 9,000 whale recordings with AI.

Findings show whales form "meaningful units" similar to human language.

Why it matters: Certain whale species are highly social, and they travel around as tight-knit family units. When a mother leaves to find food, she’ll first communicate with a family member to make sure they’ll protect her kids from giant squid, killer whales, and other threats. The insights from MIT’s AI experiment add new wrinkles to the debate over how language affects intellect, and suggest that humans are far from the only highly intelligent creatures on earth.