🎉 OpenAI's Sora Is Here, Google's Quantum Chip, xAI's Aurora Image Generator, Microsoft Copilot Vision

OpenAI Launches Sora, Google's Quantum Breakthrough, xAI Launches Beta Image Generator, Microsoft Launches Copilot Vision

Welcome to this week’s edition of AImpulse, a four-point summary of the most significant advancements in the world of Artificial Intelligence, followed by a cool new AI tool I’m trying out this week.

Here’s the pulse on this week’s top stories:

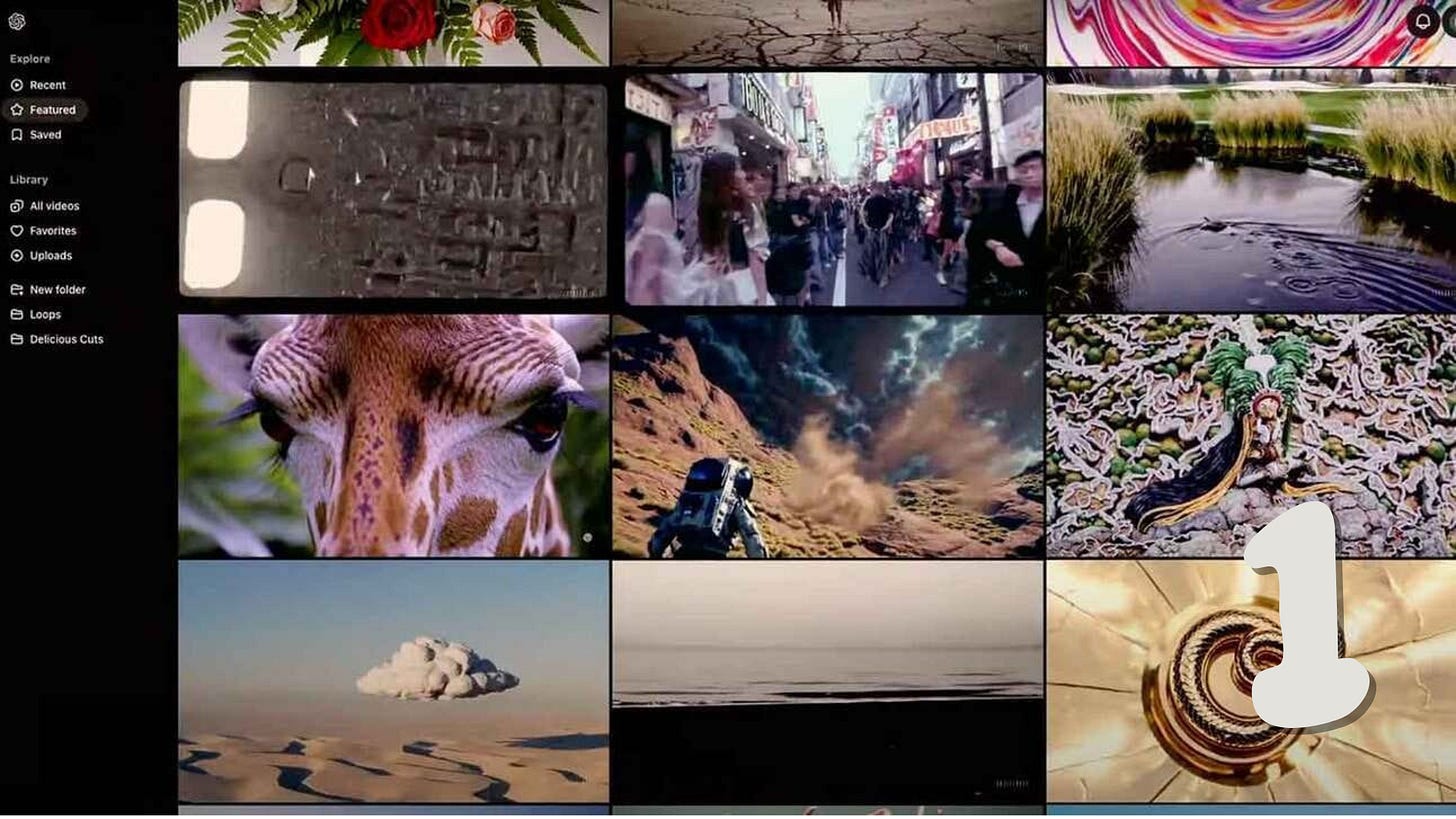

What’s Happening: OpenAI just officially launched Sora, the company’s long-awaited AI video generation model, which is now available to ChatGPT Plus and Pro subscribers through a dedicated platform with several new editing and creative features.

The details:

Sora can generate clips up to 20 seconds long in various aspect ratios, and the new ‘Turbo’ model produces them significantly faster than earlier reports about Sora suggested.

Sora’s web platform allows users to organize and view generated videos, as well as view other users’ prompts and featured content for inspiration.

Powerful creative tools include Remix for scene editing, Storyboard for stitching multiple outputs together, Blend, Loop, and Style presets.

Sora is available today to paid ChatGPT subscribers, with unlimited generations, higher resolution, and watermark removal reserved for the $200/month Pro plan.

OpenAI is restricting content with real people, minors, or copyrighted material, with only a ‘subset’ of users allowed to upload real people as input initially.

The rollout will also exclude the EU, UK, and several other territories due to regulatory concerns.

Why it matters: Sora is finally here, and while there will be arguments over its quality compared to rivals, OpenAI's reach and user base are unmatched for getting this type of tool into the public’s hands. Millions of AI ‘normies’ are about to have their first high-level video experience, opening a new world of creativity for the average user.

What’s Happening: Google just revealed Willow, a new quantum computing chip that achieves major performance breakthroughs in error correction and computation speed, demonstrating the potential for future useful, large-scale quantum computers.

The details:

Willow achieved a milestone in reducing errors exponentially as more qubits were added — a critical issue that stumped researchers for three decades.

Willow also completed a benchmark computation in under five minutes that would take one of today's fastest supercomputers 10 septillion (10^25) years.

Willow utilizes 105 qubits and can maintain the quantum state for nearly twice as long as previous designs — making it more reliable for complex calculations.

The chip was manufactured at Google's new quantum fabrication facility in Santa Barbara, one of only a handful of such specialized facilities worldwide.

Why it matters: Willow takes quantum computers a step toward the practical rather than just theoretical, proving we can overcome some of the field's biggest hurdles. The ability to harness quantum tech would revolutionize everything — but translating today's breakthrough into real-world applications will take time and further advances.
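To put the 10 septillion figure in perspective, here is a quick back-of-the-envelope check. The 10^25-year estimate comes from the story above; the age of the universe (roughly 13.8 billion years) is an outside figure added only for comparison, and the variable names are just illustrative.

```python
# 10 septillion = 10 x 10^24 = 10^25 (the figure quoted for the supercomputer).
SUPERCOMPUTER_YEARS = 10 * 10**24

# Assumption for comparison only: the universe is roughly 13.8 billion years old.
AGE_OF_UNIVERSE_YEARS = 1.38e10

# How many "ages of the universe" the classical run would need.
ratio = SUPERCOMPUTER_YEARS / AGE_OF_UNIVERSE_YEARS

print(f"Supercomputer estimate: {SUPERCOMPUTER_YEARS:.0e} years")
print(f"That is about {ratio:.1e} times the age of the universe")
```

In other words, the quoted estimate is hundreds of trillions of times longer than the universe has existed, which is why it works as a headline number for a benchmark rather than as a practical comparison.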

What’s Happening: X briefly rolled out Aurora, a new AI image generator integrated with Grok that appeared to produce more photorealistic images than the previous Flux model, though the feature was pulled after just a few hours of testing.

The details:

Aurora showed significant improvements compared to Grok’s integrated Flux model, particularly with landscapes, still-life images, and human photorealism.

The model also appeared to have minimal content restrictions, allowing the creation of copyrighted characters and public figures.

In a reply on X, Elon Musk called the tease a "beta version" of Aurora that will improve quickly.

X Developer co-lead Chris Park also revealed that Grok 3 ‘is coming,’ taking aim at OpenAI and Sam Altman in the announcement on X.

xAI’s Grok became available across the X platform last week, allowing free-tier users up to 10 messages every two hours.

Why it matters: Although it was only live briefly, Aurora looked to be an extremely powerful new image model, with xAI seemingly deciding to build its own top-tier generator instead of relying on integrations like Flux long-term. It was also wild to see the lack of restrictions, which tracks with Elon’s vision but could lead into some murky legal areas.

What’s Happening: Microsoft just launched its Copilot Vision feature, which allows its assistant to see and interact with the web pages a user is browsing in Edge in real time. It is now available in preview to a limited number of Copilot Pro subscribers.

The details:

Vision integrates directly into Edge's browser interface, allowing Copilot to analyze text and images on approved websites when enabled by users.

The feature can assist with tasks like shopping comparisons, recipe interpretation, and game strategy while browsing supported sites.

Microsoft previously revealed the feature in October alongside other Copilot upgrades, including voice and reasoning capabilities.

Microsoft emphasized privacy with Vision, making it opt-in only — along with automatic deletion of voice and context data after the end of a session.

Why it matters: The addition of real-time context and the ability for AI to ‘see’ everything in your browser makes for a wild new form of AI that we’re likely to start seeing a lot more of in 2025.

This Week’s Tool: OpenAI’s newly launched Sora AI video generator allows you to turn your text descriptions into realistic videos without cameras, actors, or editing software.

Step-by-step:

Open Sora (requires a paid ChatGPT account).

Write a detailed prompt describing your desired video (e.g., "A majestic albino jaguar drinks from a crystal-clear stream").

Choose your settings: aspect ratio (16:9, 1:1, or 9:16), resolution (480p to 1080p), and duration (5-20s).

Generate and enhance your video using remix, re-cut, or blend features.

Pro tip: Test your concepts with shorter durations and lower resolutions first, then upgrade settings for your final version.
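If you like to plan your generations before spending credits, the steps and pro tip above can be sketched in a few lines of Python. This is only a planning aid, not a Sora API: the `VideoSettings` class and `draft_of` helper are hypothetical names, and the 720p middle option is an assumption (the steps only give the 480p to 1080p range).

```python
from dataclasses import dataclass, replace

# Options as listed in the steps above; "720p" is an assumed middle value.
ASPECT_RATIOS = ("16:9", "1:1", "9:16")
RESOLUTIONS = ("480p", "720p", "1080p")
MIN_SECONDS, MAX_SECONDS = 5, 20


@dataclass(frozen=True)
class VideoSettings:
    """Hypothetical container for one generation request (not a Sora API)."""
    prompt: str
    aspect_ratio: str = "16:9"
    resolution: str = "480p"
    seconds: int = 5

    def __post_init__(self):
        # Reject anything outside the options described in the steps.
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        if self.aspect_ratio not in ASPECT_RATIOS:
            raise ValueError(f"unsupported aspect ratio: {self.aspect_ratio}")
        if self.resolution not in RESOLUTIONS:
            raise ValueError(f"unsupported resolution: {self.resolution}")
        if not MIN_SECONDS <= self.seconds <= MAX_SECONDS:
            raise ValueError(f"duration must be {MIN_SECONDS}-{MAX_SECONDS}s")


def draft_of(final: VideoSettings) -> VideoSettings:
    """Pro tip: same prompt and framing, but shortest and lowest-resolution."""
    return replace(final, resolution=RESOLUTIONS[0], seconds=MIN_SECONDS)


final = VideoSettings(
    prompt="A majestic albino jaguar drinks from a crystal-clear stream.",
    aspect_ratio="16:9",
    resolution="1080p",
    seconds=20,
)
draft = draft_of(final)
print("Test first with:", draft)
print("Then upgrade to:", final)
```

The idea is simply to keep the prompt and aspect ratio fixed while you iterate, so the only things that change between the test run and the final version are resolution and duration.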