🎉 Apple Intelligence, AI Talks to Dogs, Long Form Video Gen, Lifting ChatGPT's Hood

A Deep Dive into Everything Apple, Communicate with your Dog, Text to Long Form Video, ByteDance Chip Politics, ChatGPT's Inner Workings

Welcome to this week’s edition of AImpulse, a five-point summary of the most significant advancements in the world of Artificial Intelligence.

Here’s the pulse on this week’s top stories:

What’s Happening: Apple just kicked off its hotly-anticipated WWDC event, unveiling the company’s new ‘Apple Intelligence’ AI strategy, a partnership with OpenAI, and a flurry of new AI integrations and features coming to iOS 18, iPadOS, and macOS 15.

Siri Upgrades

A next-gen Siri will converse more naturally, remember context across requests, and accomplish more complex tasks by better understanding both voice and text.

Siri also gains ‘onscreen awareness’, with the ability to take actions and use on-device info to better tailor responses to the individual user.

New AI Features

New AI writing tools built into apps like Mail, Messages, and Notes will allow users to auto-generate and edit text.

Mail will utilize AI to better organize and surface content in inboxes, while Notes and Phone gain new audio transcription and summarization capabilities.

AI-crafted ‘Genmojis’ enable personalized text-to-image emojis, and a new "Image Playground" feature adds prompt-based image generation.

Photos get more conversational search abilities, the ability to create photo ‘stories’, and new editing tools.

Privacy

A focus of the AI reveal was privacy — with new features leveraging on-device processing when possible and Private Cloud Compute for more complex tasks.

Private Cloud Compute (PCC) is Apple’s new cloud system built specifically for private AI processing.

The new AI features will be opt-in, so users will not be forced to adopt them.

For a more technical overview of PCC, check out Apple’s “State of the Union” from WWDC here.

OpenAI Integration

The OpenAI partnership will allow Siri to leverage ChatGPT/GPT-4o when needed for more complex questions.

OpenAI’s blog also outlined additional ChatGPT tools like image generation and document understanding embedded into the new OS.

Why it matters: An upgraded, more capable Siri integrated directly with ChatGPT will make voice assistance finally useful — and onboard millions of users to the start of the on-device AI agent boom. What an exciting time to be alive.

Check out some of the best demo videos from WWDC here.

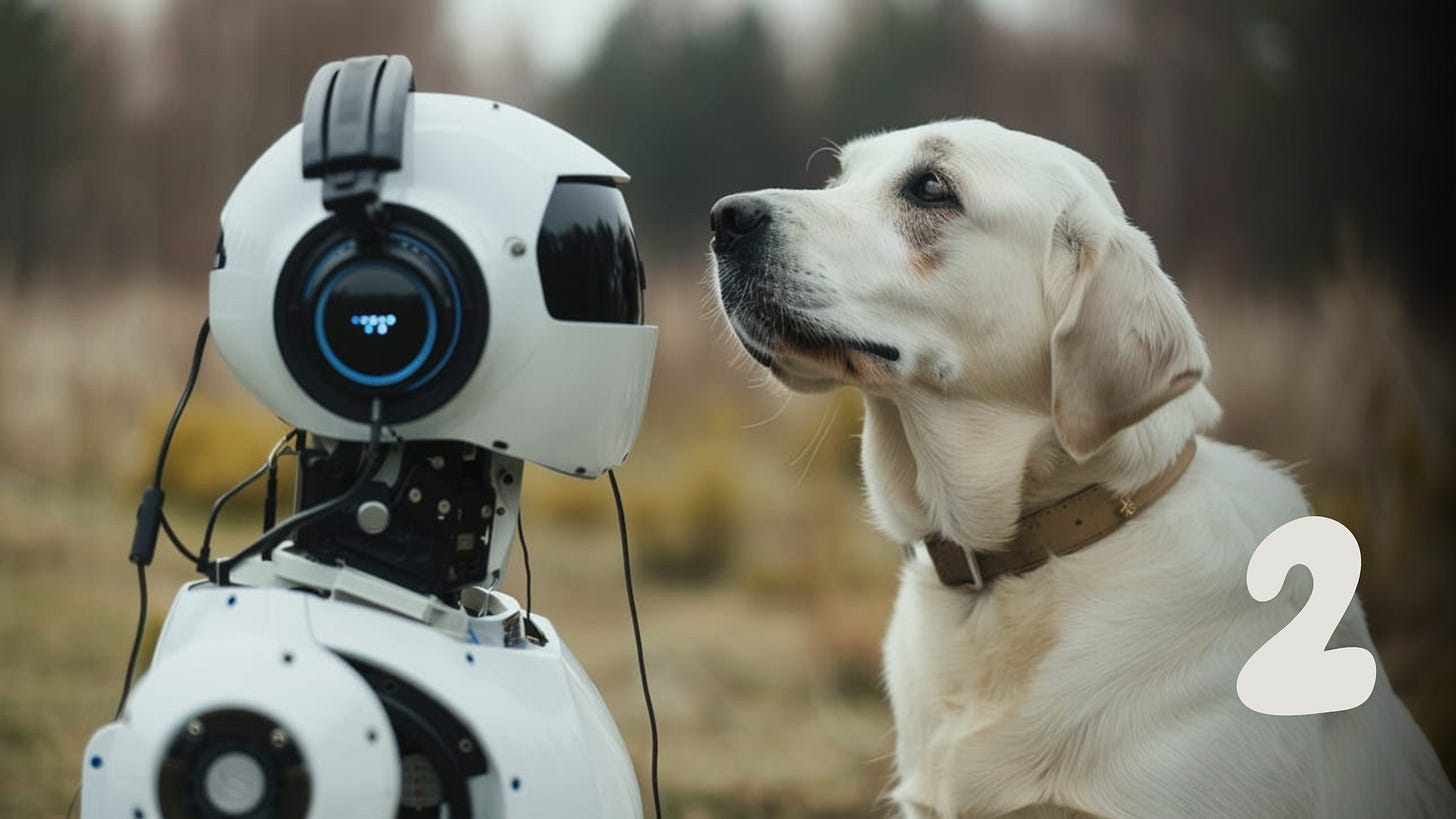

What’s Happening: A new study from the University of Michigan leveraged AI models trained on human speech to decode the meaning behind dog noises, identifying details like breed, age, sex, and emotional state with up to 70% accuracy.

The details:

Researchers gathered vocalizations from 74 dogs of varying breeds, ages, and situational contexts.

The recordings were fed into an AI model originally designed to analyze human voices, pre-trained on 960 hours of speech and then fine-tuned on dog vocalizations.

The AI was able to identify individual dogs from their barks, distinguish breed and sex, and match barks to emotional contexts like play and aggression with up to 70% accuracy.

Why it matters: AI is not only bridging the language gap for humans across the globe — but also potentially across species as well. Communicating with other intelligent animals (or at least better understanding them) seems like a skill issue that’s going to be solved sooner rather than later.

What’s Happening: Chinese tech firm Kuaishou just introduced KLING, a new text-to-video AI model capable of generating high-quality videos up to 2 minutes long with outputs that appear to rival OpenAI’s still-unreleased Sora.

The details:

KLING can produce videos at 1080p resolution with a maximum length of 2 minutes, surpassing the 1-minute Sora videos demoed by OpenAI.

KLING’s demos include realistic outputs like a man eating noodles and scenic shots, as well as surreal clips like animals in clothes.

The model uses a 3D space-time attention system to simulate complex motion and physical interactions that better mimic the real world.

The model is currently available to China-based users as a public demo on the KWAI iOS app.

Why it matters: These generations are even more mind-blowing when you consider that Will Smith’s spaghetti-eating abomination was barely a year ago. With users still anxiously waiting for the public release of Sora, other competitors are stepping in — and the AI video landscape looks like it’s about to heat up in a major way.

Editor's Note: If you’re looking for a competing US-based option in the video generation space, check out Luma Labs here. Note that its advertised figure is 120 frames generated in 120 seconds, with consistent characters, rather than 120-second clips.

What’s Happening: TikTok parent company ByteDance is renting advanced Nvidia AI chips and using them on U.S. soil, exploiting a loophole to sidestep U.S. restrictions on AI chip exports to China.

The details:

Due to national security concerns, the U.S. government prohibits Nvidia from selling AI chips like the A100 and H100 directly to Chinese companies.

The restrictions don't prevent Chinese firms from renting chips for use within the U.S. — ByteDance is allegedly leasing servers with chips from Oracle.

ByteDance reportedly had access to over 1,500 H100 chips and several thousand A100s last month through the Oracle deal.

Other Chinese giants like Alibaba and Tencent are also reportedly exploring similar options, either renting from U.S. providers or setting up U.S. data centers.

Why it matters: The AI race between the U.S. and China is only escalating — and it appears major players are going to get AI chips by any means necessary. While the U.S. tries to stall its rival’s progress with restrictions, it feels like a game of whack-a-mole that won’t stop China from reaching its AI goals.

What’s Happening: OpenAI just released a new paper detailing a method for reverse engineering concepts learned by AI models and better understanding ChatGPT’s inner workings.

The details:

The paper was authored by members of the recently disbanded superalignment team, including Ilya Sutskever and Jan Leike.

‘Scaling and Evaluating Sparse Autoencoders’ outlines a technique to identify patterns representing specific concepts inside GPT-4.

By training a second, smaller model (a sparse autoencoder) on the larger model’s internal activations, researchers found a way to extract millions of activity patterns for further exploration.

OpenAI released open-source code and a visualization tool, allowing others to explore how different words and phrases activate concepts within models.
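For readers who want a feel for the technique, here is a minimal sketch of a sparse autoencoder's forward pass in plain Python. It is a toy illustration, not OpenAI's released code: the sizes, the random untrained weights, the example activation vector, and the function names (`top_k`, `encode`, `decode`) are all assumptions made for this example. It uses a top-k rule to enforce sparsity, which is our understanding of the kind of constraint the paper describes.

```python
import random


def top_k(values, k):
    """Keep the k largest entries of `values` and zero out the rest."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    keep = set(order[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(values)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def encode(x, w_enc, b_enc, k):
    """Map a model activation to a wide feature vector with at most k non-zeros."""
    pre_activation = [p + b for p, b in zip(matvec(w_enc, x), b_enc)]
    return top_k(pre_activation, k)


def decode(z, w_dec, b_dec):
    """Rebuild an approximation of the activation from the sparse features."""
    return [r + b for r, b in zip(matvec(w_dec, z), b_dec)]


# Hypothetical toy sizes: a 4-dimensional activation, 16 candidate features,
# and only 3 features allowed to be active at once.
random.seed(0)
D_MODEL, N_FEATURES, K = 4, 16, 3

# Random, untrained weights. A real autoencoder would learn these by
# minimizing the reconstruction error over many recorded activations.
w_enc = [[random.gauss(0, 1) for _ in range(D_MODEL)] for _ in range(N_FEATURES)]
b_enc = [0.0] * N_FEATURES
# Decoder set to the transpose of the encoder (an assumption for this toy).
w_dec = [[w_enc[j][i] for j in range(N_FEATURES)] for i in range(D_MODEL)]
b_dec = [0.0] * D_MODEL

activation = [0.5, -1.2, 0.3, 2.0]  # stand-in for one internal activation
features = encode(activation, w_enc, b_enc, K)
reconstruction = decode(features, w_dec, b_dec)
active = [i for i, f in enumerate(features) if f != 0.0]

print("active feature indices:", active)
print("reconstruction:", reconstruction)
```

The interesting part is `active`: once trained, each feature index tends to fire for a recognizable concept, which is what makes the patterns explorable. With untrained weights like these, the reconstruction will not closely match the input.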

Why it matters: Much like Anthropic’s recent “Golden Gate Claude” and corresponding research, AI firms are still working to understand what’s truly going on underneath the hood. Cracking AI’s black box would be a big step towards better safety, tuning, and controllability of rapidly advancing models.